Computing the OLS parameters fails with a `LinAlgError`. The notebook cell (`# Compute parameters of OLS model`, then `OLS_theta = np. ... T y )`, followed by `# Label parameters with feature names` and `pd. ...`) ends up in NumPy's `inv`, which fails at line 551 of `~/miniconda3/envs/phoenix/lib/python3.7/site-packages/numpy/linalg/linalg.py`: `ainv = _umath_linalg.inv(a, signature=signature, extobj=extobj)`. The error handler on the preceding line, `get_linalg_error_extobj(_raise_linalgerror_singular)`, shows that the matrix being inverted is singular.
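The error comes from `np.linalg.inv`, which raises `LinAlgError` when the matrix it receives is singular. Here is a minimal sketch of how one-hot encoded features can cause this. It assumes a hypothetical design matrix that holds an intercept column plus *both* `sex_female` and `sex_male` columns. Because the two dummy columns always sum to the intercept column, the columns of `X` are linearly dependent, and `X.T @ X` cannot be inverted.

```python
import numpy as np

# Hypothetical design matrix with columns [intercept, sex_female, sex_male].
# Every row has exactly one 1 among the two dummy columns, so
# sex_female + sex_male == intercept in every row (perfect collinearity).
X = np.array([
    [1.0, 1.0, 0.0],
    [1.0, 0.0, 1.0],
    [1.0, 1.0, 0.0],
    [1.0, 0.0, 1.0],
])

gram = X.T @ X
print(np.linalg.matrix_rank(gram))  # 2, not 3: the 3x3 matrix is singular

try:
    np.linalg.inv(gram)
    inverted = True
except np.linalg.LinAlgError as err:
    # NumPy refuses to invert a singular matrix.
    inverted = False
    print("LinAlgError:", err)
```

Dropping either dummy column removes the dependency and makes `X.T @ X` invertible again.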

Because we're working with a continuous target variable, we'll create a linear regression model.
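A linear regression model fit by ordinary least squares has the closed-form estimate $\hat{\theta} = (X^\top X)^{-1} X^\top y$. Below is a minimal NumPy sketch of that estimator. It assumes a design matrix `X` whose first column is an intercept, and the helper name `ols_theta` is hypothetical. Instead of forming the inverse explicitly, it calls `np.linalg.solve` on the normal equations, which is a common choice for numerical stability.

```python
import numpy as np

def ols_theta(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Solve the OLS normal equations (X^T X) theta = X^T y.

    Raises np.linalg.LinAlgError if X^T X is singular, e.g. when the
    design matrix contains perfectly collinear columns.
    """
    return np.linalg.solve(X.T @ X, X.T @ y)

# Toy check: y = 1 + 2*x exactly, with an intercept column in X.
x = np.array([0.0, 1.0, 2.0, 3.0])
X = np.column_stack([np.ones_like(x), x])
y = 1.0 + 2.0 * x

theta = ols_theta(X, y)
print(theta)  # approximately [1. 2.]
```

Like an explicit inverse, `np.linalg.solve` raises `np.linalg.LinAlgError` when $X^\top X$ is singular. Using it therefore does not sidestep perfectly collinear columns.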

Let's generate a toy dataset with three variables: the third column serves as the target variable, while the remaining two are categorical features.
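Here is a minimal pandas sketch of such a dataset. The column names (`sex`, `smoker`, `target`), the category values, the sample size, and the coefficients are all hypothetical placeholders, not the data shown in the post.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(seed=0)  # fixed seed for reproducibility
n = 8

# Two hypothetical categorical features.
df = pd.DataFrame({
    "sex": rng.choice(["male", "female"], size=n),
    "smoker": rng.choice(["yes", "no"], size=n),
})

# Hypothetical continuous target: a linear function of the categories
# plus Gaussian noise.
df["target"] = (
    10.0
    + 3.0 * (df["sex"] == "male")
    + 5.0 * (df["smoker"] == "yes")
    + rng.normal(scale=0.5, size=n)
)
print(df)
```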

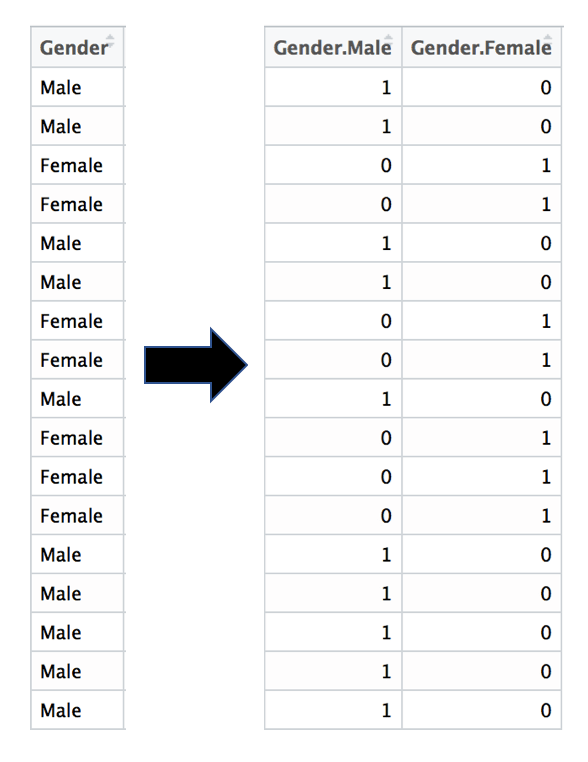

Machines aren't that smart. Many machine learning models require categorical features to be converted into a format they can work with, typically via a widely used feature engineering technique called one-hot encoding. For example, the feature sex, containing the values male and female, is transformed into the columns sex_male and sex_female, each containing binary values.

A common convention after one-hot encoding is to remove one of the one-hot encoded columns from each categorical feature. The removed column carries no extra information, because the remaining columns already determine it: a row with sex_male = 0 must have sex_female = 1.
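Here is a sketch of both steps with pandas. `pd.get_dummies` performs the one-hot encoding, and its `drop_first=True` option drops the first category level of each encoded feature. The `sex` values mirror the example in the text, but the rows themselves are hypothetical. Dropping a column matters for linear regression with an intercept. Without the drop, `sex_female + sex_male` equals 1 in every row, which duplicates the intercept column and makes the design matrix perfectly collinear.

```python
import pandas as pd

df = pd.DataFrame({"sex": ["male", "female", "female", "male"]})

# Full one-hot encoding: one binary column per category level.
full = pd.get_dummies(df, columns=["sex"])
print(full.columns.tolist())  # ['sex_female', 'sex_male']

# Every row has exactly one 1 across the dummy columns.
print((full.astype(int).sum(axis=1) == 1).all())  # True

# drop_first=True removes the first level (levels are sorted, so 'female'
# is dropped here), leaving one column where 1 means male, 0 means female.
reduced = pd.get_dummies(df, columns=["sex"], drop_first=True)
print(reduced.columns.tolist())  # ['sex_male']
```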
